Hybrid data environments are no longer a transitional phase—they are the steady state for large enterprises. Hybrid Data Solutions with Microsoft Fabric and Azure address a reality where critical data continues to live across on-premises systems, edge platforms, and multiple cloud services, even as organizations accelerate AI and analytics adoption.

For CTOs and CDOs, the challenge is not whether hybrid will exist, but how to make it governable, performant, and economically sustainable. Fragmented pipelines, inconsistent security models, and duplicated analytics stacks often erode trust and slow decision-making.

Microsoft Fabric and Azure fundamentally change this equation. By combining a unified lakehouse architecture, integrated analytics experiences, and deep Azure interoperability, Fabric enables enterprises to design hybrid data solutions that feel cohesive rather than stitched together.

This guide explores hybrid data solutions with Microsoft Fabric and Azure from an enterprise architecture perspective—covering why hybrid matters in 2026, how Fabric and Azure work together, proven deployment patterns, and best practices for governance, performance, and cost. The focus is strategic and practical: how to design hybrid analytics platforms that scale with business ambition, not technical debt.

Related insight: Read our blog that explores how AI copilots for enterprises are transforming executive leadership in 2026.

TL;DR — Executive Summary

- Hybrid data solutions with Microsoft Fabric and Azure allow enterprises to modernize analytics without abandoning on-prem or edge systems.

- Microsoft Fabric’s OneLake, combined with Azure integration services, enables a unified lakehouse across hybrid environments.

- Successful hybrid architectures require intentional design across ingestion, governance, security, performance, and cost control.

- Enterprises that treat Hybrid Data Solutions with Microsoft Fabric and Azure as a strategic operating model—not a temporary compromise—achieve faster modernization and lower risk.

Why hybrid data architectures matter for modern enterprises

Hybrid data architectures persist because enterprise realities persist. Despite aggressive cloud adoption, core operational systems, regulatory constraints, and latency-sensitive workloads ensure that data estates remain distributed. In 2026, hybrid is not a compromise—it is an intentional architecture choice.

Business drivers: low latency, sovereignty and cost optimization

Several structural drivers keep enterprises anchored to hybrid models:

First, latency and operational proximity matter. Manufacturing execution systems, financial trading platforms, healthcare devices, and retail edge systems generate data that must be processed close to where it is created. Shipping everything to the cloud introduces unacceptable delays and operational risk.

Second, data sovereignty and regulatory compliance continue to tighten. Regional regulations increasingly dictate where sensitive data can be stored and processed. Hybrid architectures allow enterprises to localize regulated data while still enabling centralized analytics through governed abstractions. This approach aligns closely with modern data governance frameworks.

Third, cost optimization plays a larger role than many cloud-first narratives admit. Large data volumes, historical datasets, and infrequently accessed records are often cheaper to retain on-prem or in lower-cost tiers while still participating in enterprise analytics through hybrid lakehouse patterns.

Hybrid data solutions with Microsoft Fabric and Azure allow organizations to balance these forces without fragmenting analytics experiences or governance controls.

Related insight: Begin your journey by learning more about our partnership with Microsoft and how to choose the right Microsoft Fabric adoption partner.

Common hybrid data challenges: connectivity, freshness and trust

While hybrid architectures are necessary, they introduce non-trivial challenges:

Connectivity fragility is a common failure point. VPNs, gateways, and custom connectors often become brittle dependencies that disrupt analytics pipelines during outages or maintenance windows.

Data freshness and synchronization also suffer. Without disciplined ingestion patterns, such as change data capture (CDC), incremental loads, or event-driven pipelines, hybrid environments drift out of sync, eroding confidence in reports and AI models.

Finally, trust and governance breakdowns occur when hybrid data estates evolve faster than policies. Disconnected security models, inconsistent lineage, and unclear ownership undermine executive confidence in analytics outputs. These issues are frequently cited as barriers to AI readiness in hybrid enterprises.

| Challenge | Impact | Business Risk |
| --- | --- | --- |
| Fragile connectivity | Pipeline failures | Decision delays |
| Stale data | Conflicting reports | Loss of trust |
| Fragmented governance | Audit gaps | Compliance exposure |

Hybrid data solutions with Microsoft Fabric and Azure address these challenges by providing a unified architectural and governance layer across hybrid environments.

Related Insight: Read our blog on Microsoft Fabric vs Azure Data Stack: Enterprise Choice for 2026 to understand the key capabilities and differences.

How Microsoft Fabric and Azure enable hybrid data solutions

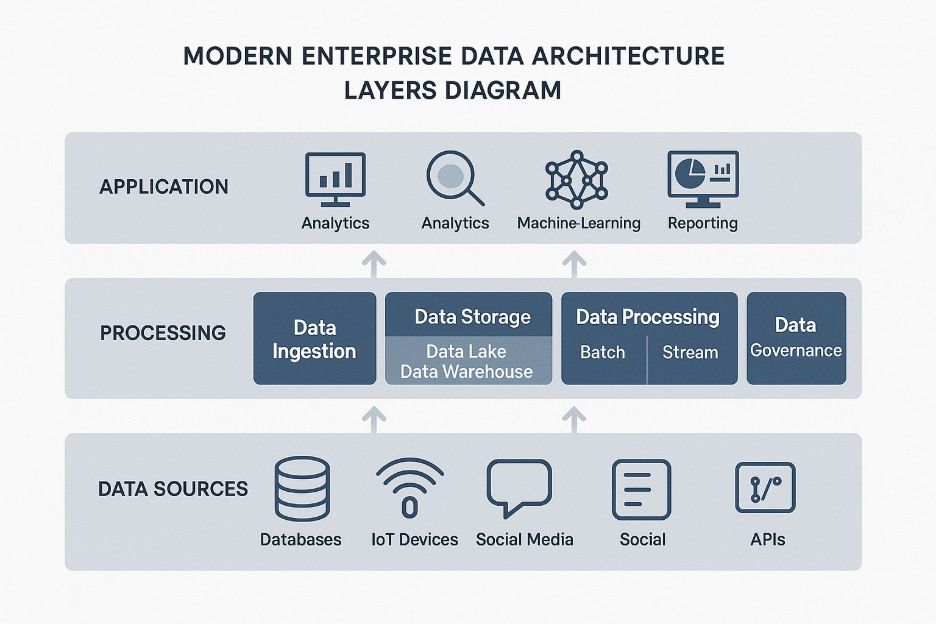

Microsoft Fabric is not simply another analytics tool—it is an architectural abstraction that unifies data engineering, analytics, and governance. When paired with Azure’s hybrid integration capabilities, it becomes a powerful foundation for enterprise hybrid data solutions.

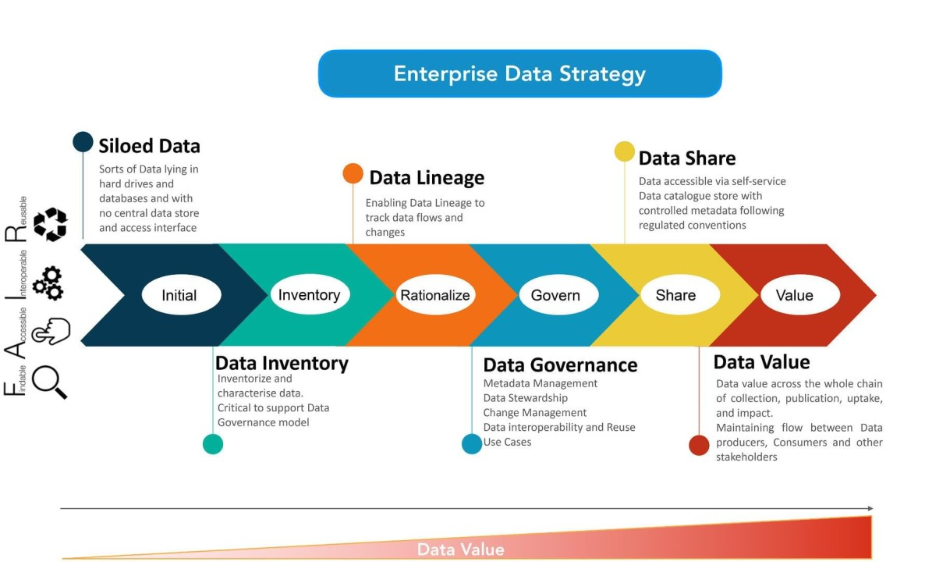

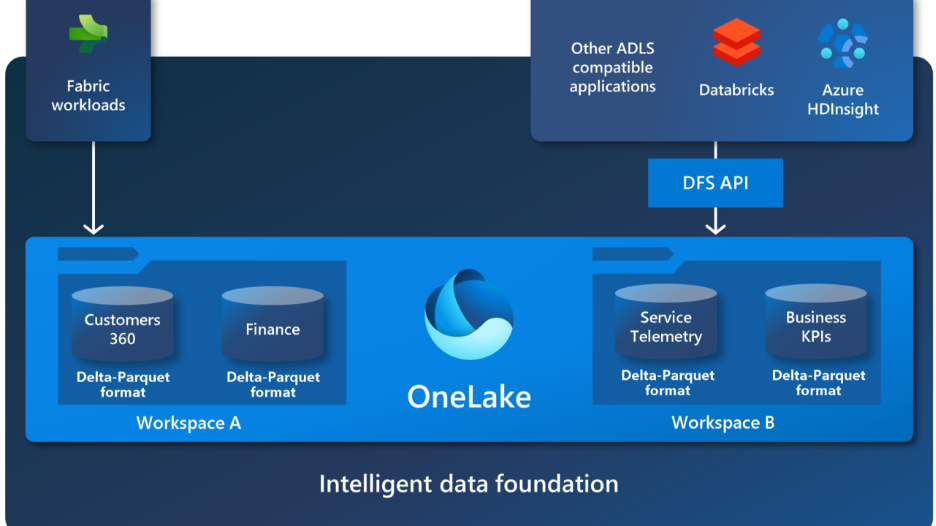

OneLake and unified storage strategy for hybrid analytics

At the center of Fabric is OneLake, a single logical data lake that spans all Fabric workloads. From a hybrid perspective, OneLake acts as the analytical gravity well—where governed, analytics-ready data converges, regardless of its source.

Rather than forcing enterprises to duplicate data across warehouses, marts, and analytics engines, OneLake enables:

- A single copy of data referenced by multiple engines

- Consistent security and governance policies

- Standardized data formats (Delta/Parquet) for analytics and AI

In hybrid data solutions with Microsoft Fabric and Azure, OneLake does not replace on-prem storage. Instead, it becomes the curated analytics layer that synchronizes with on-prem and edge systems through controlled ingestion patterns.

Related Insight: This lakehouse-first strategy aligns with modern analytics architectures outlined in Techment’s CTO guide to Microsoft Fabric architecture for modern analytics.

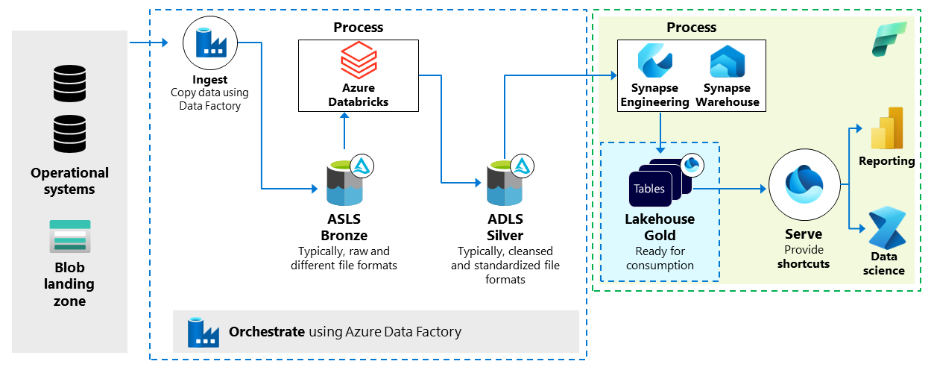

Ingesting on-prem data into Fabric: connectors and Azure Arc

Hybrid ingestion is where many architectures fail. Microsoft Fabric leverages Azure’s mature integration stack to simplify this layer:

- Data Factory in Microsoft Fabric provides managed connectors for databases, files, and enterprise systems.

- The on-premises data gateway (used by Fabric pipelines and dataflows) and self-hosted integration runtimes (used by Azure Data Factory) enable secure data movement from on-prem environments without exposing internal networks.

- Azure Arc extends Azure management, identity, and policy controls to on-prem and edge resources, reducing operational fragmentation.

This combination allows enterprises to standardize ingestion patterns across hybrid sources rather than building bespoke pipelines per system. Over time, this consistency reduces operational risk and accelerates onboarding of new data sources, an essential capability for scaling analytics programs.
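To make "standardize ingestion patterns" concrete, here is a minimal metadata-driven sketch in plain Python: each source is described once in a registry, and a single function turns that description into a generic ingestion step. The registry, field names, and pattern labels are hypothetical illustrations, not a Fabric or Data Factory API; one common way to implement the same idea in practice is a parameterized pipeline driven by a control table.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Literal, Optional

# Hypothetical ingestion patterns; each maps to one reusable pipeline template.
Pattern = Literal["log_cdc", "watermark", "full"]


@dataclass(frozen=True)
class SourceConfig:
    """Metadata describing one on-prem source (illustrative fields only)."""
    name: str
    pattern: Pattern
    target_table: str
    watermark_column: Optional[str] = None


# Generic step descriptions shared by every source that uses the same pattern.
TEMPLATES = {
    "log_cdc": "read change log since last checkpoint, merge into {target}",
    "watermark": "read rows where {column} > last watermark, merge into {target}",
    "full": "reload full snapshot, overwrite {target}",
}


def build_step(source: SourceConfig) -> str:
    """Turn one source's metadata into a concrete ingestion step."""
    if source.pattern == "watermark" and not source.watermark_column:
        raise ValueError(f"{source.name}: watermark pattern needs watermark_column")
    return TEMPLATES[source.pattern].format(
        target=source.target_table, column=source.watermark_column
    )


# Onboarding a new source means adding a row here, not writing a new pipeline.
REGISTRY = [
    SourceConfig("erp_orders", "log_cdc", "bronze.erp_orders"),
    SourceConfig("plant_files", "watermark", "bronze.plant_files", "modified_at"),
    SourceConfig("ref_currency", "full", "bronze.ref_currency"),
]

if __name__ == "__main__":
    for src in REGISTRY:
        print(f"{src.name}: {build_step(src)}")
```

The design choice is that templates vary by pattern, not by system, so the number of pipelines to maintain grows with the handful of patterns rather than with the number of sources.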

Enabling real-time hybrid analytics with streaming pipelines

Hybrid data solutions with Microsoft Fabric and Azure are not limited to batch processing. Many enterprises require near-real-time insights from distributed systems.

By integrating Azure Event Hubs, Azure IoT Hub, and Fabric’s Real-Time Intelligence capabilities (such as eventstreams), organizations can design streaming pipelines that ingest edge or on-prem events into Fabric lakehouses with minimal latency.

These patterns support use cases such as:

- Operational monitoring across plants or stores

- Fraud detection and risk analytics

- Customer experience personalization

Crucially, streaming data lands in the same governed Fabric environment as batch data, avoiding the siloed “speed layer” architectures that previously complicated hybrid analytics.
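A minimal sketch of the "one landing zone" idea, assuming a micro-batch model: streaming events are buffered and flushed through the same landing function that batch loads use, so both end up in one table with the same metadata columns. `LANDED`, `land`, and `MicroBatcher` are hypothetical stand-ins; in Fabric the equivalent wiring could be eventstreams and pipelines writing to the same lakehouse table, not hand-written code like this.

```python
from __future__ import annotations

import time
from typing import Callable, List, Optional

# Stand-in for one governed lakehouse table that both paths write to.
LANDED: List[dict] = []


def land(rows: List[dict], source_kind: str) -> None:
    """Single landing function shared by batch loads and streaming flushes."""
    ingested_at = time.time()
    for row in rows:
        LANDED.append({**row, "_source_kind": source_kind, "_ingested_at": ingested_at})


class MicroBatcher:
    """Buffer streaming events and flush them when the batch is full or old enough.

    The age check only runs when a new event arrives; a real stream processor
    would also flush on a timer.
    """

    def __init__(self, max_rows: int, max_age_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_rows = max_rows
        self.max_age_s = max_age_s
        self.clock = clock
        self.buffer: List[dict] = []
        self.opened_at: Optional[float] = None

    def add(self, event: dict) -> None:
        if not self.buffer:
            self.opened_at = self.clock()
        self.buffer.append(event)
        too_old = self.clock() - self.opened_at >= self.max_age_s
        if len(self.buffer) >= self.max_rows or too_old:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            land(self.buffer, "stream")
            self.buffer = []
            self.opened_at = None


if __name__ == "__main__":
    land([{"order_id": 1}], "batch")  # e.g. a nightly batch load
    stream = MicroBatcher(max_rows=2, max_age_s=5.0)
    for order_id in (2, 3):  # e.g. events relayed from Event Hubs (hypothetical)
        stream.add({"order_id": order_id})
    print([(r["order_id"], r["_source_kind"]) for r in LANDED])
```

Because both paths stamp the same `_source_kind` and `_ingested_at` columns, downstream queries and governance rules treat streaming and batch rows as one dataset rather than two stacks.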

Related Insight: Our Microsoft Data and AI Partner blog explores the strategic value a Microsoft Data and AI Partner brings to enterprises.

Best practices for hybrid data solutions with Microsoft Fabric and Azure

No single hybrid architecture fits every enterprise. However, several repeatable patterns have emerged as best practices for hybrid data solutions with Microsoft Fabric and Azure.

Lakehouse-first hybrid pattern for analytics and BI

This pattern is the most common starting point for enterprises modernizing analytics.

Operational systems—ERP, CRM, manufacturing, or financial platforms—remain on-prem or in private clouds. Data is ingested incrementally into Fabric via Azure Data Factory pipelines and landed in OneLake as curated Delta tables.

From there:

- Fabric SQL and Spark engines serve analytics workloads

- Power BI operates directly on OneLake data

- Governance and lineage are enforced centrally

This approach minimizes disruption to operational systems while delivering a modern analytics experience.

Related Insight: Read about phased modernization roadmaps, as described in Techment’s analysis of Microsoft Fabric vs traditional data warehousing.

Edge ingestion and streaming pattern for near-real-time use cases

For latency-sensitive environments, such as manufacturing or logistics, edge systems publish telemetry and events locally. These events are forwarded to Azure Event Hubs or IoT Hub and streamed into Fabric in near real time.

Key characteristics of this pattern include:

- Local processing for immediate operational control

- Centralized analytics in Fabric for trend analysis and optimization

- Unified governance across streaming and batch data

By converging real-time and historical data in OneLake, enterprises avoid maintaining separate analytics stacks for operational and strategic insights.
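The split between local control and central analytics can be sketched in a few lines. This is a minimal illustration, assuming temperature telemetry arrives as (timestamp, machine, value) tuples; the threshold, window size, and function name are hypothetical. Alerts are raised at the edge immediately, while only per-minute summaries would be forwarded (for example, to Event Hubs or IoT Hub) for trend analysis in Fabric.

```python
from __future__ import annotations

from collections import defaultdict
from statistics import mean

ALERT_THRESHOLD_C = 85.0  # hypothetical limit enforced locally at the plant
WINDOW_S = 60             # hypothetical summary window forwarded to the cloud


def process_at_edge(readings):
    """Split edge telemetry into local alerts and compact summaries.

    readings: iterable of (timestamp_s, machine_id, temperature_c).
    Returns (alerts, summaries): alerts are acted on locally without waiting
    for the cloud; summaries are small enough to forward for central analytics.
    """
    alerts = []
    windows = defaultdict(list)
    for ts, machine, temp in readings:
        if temp > ALERT_THRESHOLD_C:
            alerts.append((ts, machine, temp))
        window_start = int(ts // WINDOW_S) * WINDOW_S
        windows[(machine, window_start)].append(temp)
    summaries = [
        {"machine": m, "window_start": w, "count": len(v),
         "avg_c": round(mean(v), 2), "max_c": max(v)}
        for (m, w), v in sorted(windows.items())
    ]
    return alerts, summaries


if __name__ == "__main__":
    sample = [(0, "m1", 80.0), (30, "m1", 90.0), (65, "m1", 70.0), (10, "m2", 60.0)]
    alerts, summaries = process_at_edge(sample)
    print(alerts)
    print(summaries)
```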

Multi-region hybrid query pattern for global performance and compliance

Global enterprises often face a dual mandate: comply with regional data residency laws while providing global analytics visibility.

In this pattern:

- Data remains resident in regional environments

- Aggregated or anonymized datasets are synchronized into Fabric

- Global dashboards and AI models operate on compliant views

This hybrid query approach balances compliance with performance and is increasingly relevant as regulatory scrutiny intensifies across jurisdictions.
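A sketch of the "synchronize aggregates, not raw data" step, assuming row-level records stay in the regional environment and only grouped counts are exported. `MIN_CELL_SIZE` and `aggregate_for_global` are hypothetical. Small-cell suppression is one common safeguard, not a complete anonymization method, and the right threshold is a compliance decision rather than a technical one.

```python
from __future__ import annotations

from collections import Counter

MIN_CELL_SIZE = 5  # hypothetical: suppress groups too small to share safely


def aggregate_for_global(rows, group_by):
    """Reduce in-region, row-level records to counts that may leave the region.

    Only the group_by columns survive; every other field (names, IDs) is
    dropped, and groups smaller than MIN_CELL_SIZE are suppressed because
    small counts can still identify individuals.
    """
    counts = Counter(tuple(row[col] for col in group_by) for row in rows)
    return [
        dict(zip(group_by, key), customers=n)
        for key, n in sorted(counts.items())
        if n >= MIN_CELL_SIZE
    ]


if __name__ == "__main__":
    eu_rows = (
        [{"customer_id": f"c{i}", "country": "DE", "segment": "retail"} for i in range(6)]
        + [{"customer_id": f"b{i}", "country": "DE", "segment": "b2b"} for i in range(2)]
    )
    print(aggregate_for_global(eu_rows, ["country", "segment"]))
```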

Begin your transformation journey and automate governance across all platforms with our data solutions.

Data pipeline and orchestration best practices for hybrid data solutions with Microsoft Fabric and Azure

Hybrid data solutions with Microsoft Fabric and Azure succeed or fail based on pipeline discipline. Poorly designed ingestion and orchestration quickly become the weakest link in enterprise analytics.

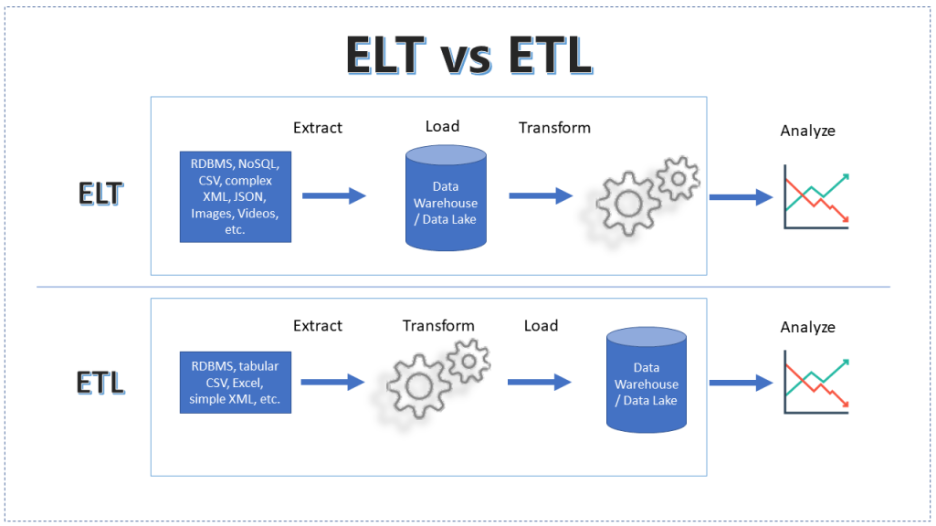

Choosing ELT vs ETL for hybrid pipelines

In modern hybrid architectures, ELT is generally preferred. Raw data is ingested into OneLake with minimal transformation, preserving fidelity and enabling multiple downstream use cases.

ETL still has a role where:

- Data volumes are so large that filtering or aggregating at the source significantly reduces transfer and storage costs

- Regulatory filtering must occur before cloud ingestion

- Legacy systems impose transformation constraints

The key is intentionality. Enterprises should avoid defaulting to ETL simply because it is familiar. Fabric’s scalable compute and lakehouse architecture make ELT both performant and governable for most hybrid scenarios.

CDC and incremental load strategies to keep data fresh

Change Data Capture (CDC) is essential for hybrid data solutions with Microsoft Fabric and Azure. Full reloads are rarely sustainable at enterprise scale.

Best practices include:

- Log-based CDC from transactional systems

- Watermark-based incremental loads for files

- Event-driven ingestion for real-time systems
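As an illustration of the watermark-based pattern, the sketch below uses Python's built-in `sqlite3` module as a stand-in for both the on-prem source and the lakehouse target. The `orders` table, its `updated_at` column, and the function names are hypothetical. Log-based CDC reads the database's transaction log instead of a timestamp column and is not shown here.

```python
import sqlite3


def incremental_load(src: sqlite3.Connection, tgt: sqlite3.Connection,
                     watermark: str) -> str:
    """Copy rows changed after `watermark` from src.orders into tgt.orders.

    Assumes updated_at is an ISO-8601 string set whenever a row changes.
    Rows are upserted by primary key, and the return value is the new high
    watermark to persist for the next run. A strict '>' can miss rows
    committed late with an equal timestamp; one common mitigation is to
    re-read a small overlap window and deduplicate.
    """
    rows = src.execute(
        "SELECT id, amount, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    # INSERT OR REPLACE acts as an upsert keyed on the PRIMARY KEY (id).
    tgt.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    tgt.commit()
    return rows[-1][2] if rows else watermark


def demo():
    src = sqlite3.connect(":memory:")
    tgt = sqlite3.connect(":memory:")
    for db in (src, tgt):
        db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
    src.executemany("INSERT INTO orders VALUES (?, ?, ?)", [
        (1, 10.0, "2026-01-01T00:00"),
        (2, 20.0, "2026-01-02T00:00"),
    ])
    wm = incremental_load(src, tgt, "1970-01-01T00:00")  # initial load: both rows
    src.execute("UPDATE orders SET amount = 25.0, updated_at = '2026-01-03T00:00' WHERE id = 2")
    wm = incremental_load(src, tgt, wm)  # second run copies only the changed row
    return wm, tgt.execute("SELECT id, amount FROM orders ORDER BY id").fetchall()


if __name__ == "__main__":
    print(demo())
```

The second run touches one row instead of reloading the whole table, which is the property that keeps full reloads off the critical path at enterprise scale.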

Related Insight: Defining freshness SLAs per data domain aligns business expectations with technical realities, a recurring governance theme in Techment’s work on data quality for AI.
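Freshness SLAs are easiest to enforce when they are written down as data. A minimal sketch, assuming the pipeline records each domain's last successful load time; the domains, SLA values, and function name are hypothetical. A check like this could run after each orchestration cycle and raise an alert for every breached domain.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone
from typing import Dict, List

# Hypothetical freshness SLAs agreed with each data domain's business owner.
FRESHNESS_SLA = {
    "finance": timedelta(hours=24),
    "operations": timedelta(minutes=15),
}


def freshness_breaches(last_loaded: Dict[str, datetime], now: datetime) -> List[str]:
    """Return domains whose latest successful load is older than their SLA.

    A domain with no recorded load is treated as a breach.
    """
    breaches = []
    for domain, sla in FRESHNESS_SLA.items():
        loaded = last_loaded.get(domain)
        if loaded is None or now - loaded > sla:
            breaches.append(domain)
    return breaches


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    print(freshness_breaches({
        "finance": now - timedelta(hours=2),
        "operations": now - timedelta(minutes=30),
    }, now))
```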

Governance and security: protecting hybrid data in Fabric + Azure

Hybrid analytics introduces a governance paradox. Enterprises want centralized control, yet data physically lives across distributed environments. Hybrid data solutions with Microsoft Fabric and Azure resolve this tension by decoupling governance from physical location.

Rather than enforcing governance through fragmented tooling, Fabric and Azure provide a shared control plane for identity, access, policy, and observability—critical for enterprise trust.

Identity best practices: Microsoft Entra and workspace identities

Identity is the foundation of hybrid security. Microsoft Fabric relies on Microsoft Entra ID as the unified identity provider across cloud and hybrid assets. This allows enterprises to apply consistent authentication and authorization policies regardless of where data originates.

Best practices include:

- Workspace-level identity boundaries aligned to domains or business units

- Managed identities for pipelines and services, eliminating credential sprawl

- Least-privilege access models enforced through Entra groups rather than individual users
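The group-based model can be reasoned about with a small sketch. It assumes roles are assigned to Entra groups per workspace and that a user's effective access is the highest role held by any of their groups. The workspace and group names are hypothetical, and this only models the decision logic; in Fabric, the assignments themselves are managed in the workspace's access settings, not in code like this.

```python
from __future__ import annotations

from typing import Optional, Set

# Hypothetical assignments: roles are granted to Entra groups, never to users.
WORKSPACE_ROLES = {
    "finance-analytics": {
        "grp-finance-engineers": "Contributor",
        "grp-finance-analysts": "Viewer",
    },
}
# Fabric workspace roles ordered from least to most privileged.
ROLE_RANK = {"Viewer": 1, "Contributor": 2, "Member": 3, "Admin": 4}


def effective_role(user_groups: Set[str], workspace: str) -> Optional[str]:
    """Highest role any of the user's groups holds; None means no access."""
    granted = [
        role
        for group, role in WORKSPACE_ROLES.get(workspace, {}).items()
        if group in user_groups
    ]
    return max(granted, key=ROLE_RANK.__getitem__, default=None)


if __name__ == "__main__":
    print(effective_role({"grp-finance-analysts"}, "finance-analytics"))
```

Because nothing is granted to individuals, onboarding or offboarding a person is a group-membership change, and an access review only has to inspect a short list of group assignments.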

When combined with Azure Arc, on-prem and edge resources can participate in the same identity and policy framework as cloud-native services. This eliminates one of the most common hybrid failure modes: parallel identity systems with inconsistent controls.

Related Insight: Our blog Microsoft Azure for Enterprises: The Backbone of AI-Driven Modernization explains how Azure provides a cohesive, enterprise-grade ecosystem that integrates infrastructure, data, security, AI, and governance under a unified architectural vision.

Data residency, encryption, and compliance controls

Regulatory requirements increasingly dictate how hybrid data solutions are designed. Fabric and Azure support compliance through layered controls:

- Encryption at rest and in transit using Microsoft-managed or customer-managed keys

- Regional Fabric capacities aligned with residency requirements

- Data minimization and anonymization prior to cross-region synchronization

A critical architectural principle is policy-driven data movement. Rather than relying on developer discretion, enterprises should encode residency and compliance rules directly into ingestion and transformation pipelines.

This ensures that as hybrid environments scale, compliance posture remains intact without becoming a manual bottleneck.
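Policy-driven movement means the rule lives in one place and every pipeline consults it before copying anything. A minimal sketch, assuming datasets carry a classification label and an origin region; the labels, regions, and class names are hypothetical, and in a real estate the policy would more likely be loaded from a governed configuration store than hard-coded.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical policy: destination regions allowed per (classification, origin).
# Anything not listed is denied by default.
RESIDENCY_POLICY = {
    ("restricted", "eu"): {"eu"},
    ("confidential", "eu"): {"eu"},
    ("aggregated", "eu"): {"eu", "us", "apac"},
}


class ResidencyViolation(Exception):
    """Raised before any data moves when the policy forbids a transfer."""


@dataclass(frozen=True)
class Dataset:
    name: str
    classification: str
    origin_region: str


def is_movement_allowed(ds: Dataset, destination_region: str) -> bool:
    allowed = RESIDENCY_POLICY.get((ds.classification, ds.origin_region), set())
    return destination_region in allowed


def check_movement(ds: Dataset, destination_region: str) -> None:
    """Guard to call at the start of every copy or sync step."""
    if not is_movement_allowed(ds, destination_region):
        raise ResidencyViolation(
            f"{ds.name}: {ds.classification} data from {ds.origin_region} "
            f"may not move to {destination_region}"
        )


if __name__ == "__main__":
    check_movement(Dataset("sales_by_country", "aggregated", "eu"), "us")  # allowed
    try:
        check_movement(Dataset("patient_visits", "restricted", "eu"), "us")
    except ResidencyViolation as err:
        print(f"blocked: {err}")
```

Deny-by-default is the important choice: a new classification or region that nobody has written a rule for cannot leak through an unreviewed pipeline.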

Observability, lineage, and auditability across hybrid estates

Trust in analytics depends on transparency. Microsoft Fabric integrates lineage, impact analysis, and usage telemetry across its workloads. When paired with Azure governance tooling, enterprises gain:

- End-to-end lineage from on-prem source to executive dashboard

- Visibility into data freshness, failures, and SLA adherence

- Audit trails for regulatory and internal review

This level of observability is especially important for AI and advanced analytics, where opaque pipelines can quickly undermine executive confidence. Techment frequently sees governance maturity as the inflection point between experimental analytics and enterprise-scale adoption.
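End-to-end lineage is, at its core, a graph walk. Fabric provides its own lineage view, so the sketch below is not its API; it only illustrates, with hypothetical asset names, how an upstream traversal answers an audit question such as "which on-prem sources feed this executive dashboard?"

```python
from __future__ import annotations

from collections import deque
from typing import Dict, List

# Hypothetical lineage edges: each asset maps to the assets it reads from.
LINEAGE: Dict[str, List[str]] = {
    "dashboard:exec_revenue": ["semantic_model:revenue"],
    "semantic_model:revenue": ["lakehouse:gold.revenue_daily"],
    "lakehouse:gold.revenue_daily": ["lakehouse:silver.orders", "lakehouse:silver.fx_rates"],
    "lakehouse:silver.orders": ["onprem:erp.orders"],
    "lakehouse:silver.fx_rates": ["onprem:treasury.fx"],
}


def upstream(asset: str) -> List[str]:
    """Breadth-first walk from an asset back to everything it depends on."""
    seen = {asset}
    order: List[str] = []
    queue = deque([asset])
    while queue:
        for parent in LINEAGE.get(queue.popleft(), []):
            if parent not in seen:  # guards against shared parents and cycles
                seen.add(parent)
                order.append(parent)
                queue.append(parent)
    return order


if __name__ == "__main__":
    sources = [a for a in upstream("dashboard:exec_revenue") if a.startswith("onprem:")]
    print(sources)
```

Reversing the edges gives impact analysis: the same walk, run downstream from an on-prem table, lists every report affected by a schema change or outage.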

Related Insight: Microsoft Fabric Architecture: A CTO’s Guide to Modern Analytics & AI

Cost, performance, and operational playbook

Hybrid architectures introduce more moving parts—but they also offer more levers for optimization. Enterprises that treat cost and performance as first-class architectural concerns consistently outperform those that optimize reactively.

Cost control: data lifecycle and compute strategies

In hybrid data solutions with Microsoft Fabric and Azure, cost optimization begins with data lifecycle management.

Effective strategies include:

- Hot, warm, and cold data tiers mapped to business value

- Retaining raw historical data on lower-cost storage while curating analytics-ready datasets in OneLake

- Using capacity-based planning in Fabric to align spend with workload criticality

Hybrid environments also enable selective modernization. Not every dataset needs premium cloud compute. Enterprises can prioritize high-value analytics while maintaining cost-effective storage for compliance or archival data.

This disciplined approach mirrors best practices Techment outlines in its guidance on enterprise analytics modernization and cost governance.
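Tier assignment can be expressed as a simple rule over access recency and business value. A minimal sketch with hypothetical thresholds: the 30-day and 365-day cut-offs are placeholders, and which physical storage backs each tier (curated OneLake tables for hot data, lower-cost storage on-prem or in Azure for cold data) remains a design decision.

```python
from __future__ import annotations

from datetime import date

# Hypothetical cut-offs; tune them to observed access patterns.
HOT_DAYS = 30     # hot tier: e.g. curated, analytics-ready tables in OneLake
WARM_DAYS = 365   # warm tier: still queryable, on cheaper capacity or storage


def assign_tier(last_accessed: date, today: date, business_critical: bool = False) -> str:
    """Map a dataset to 'hot', 'warm', or 'cold' by days since last access.

    Business-critical datasets never drop below 'warm'.
    """
    age_days = (today - last_accessed).days
    if age_days <= HOT_DAYS:
        return "hot"
    if age_days <= WARM_DAYS or business_critical:
        return "warm"
    return "cold"  # cold tier: e.g. archival storage on-prem or in a low-cost tier


if __name__ == "__main__":
    today = date(2026, 6, 30)
    for name, last, critical in [
        ("daily_sales", date(2026, 6, 29), False),
        ("2025_forecasts", date(2025, 12, 1), False),
        ("audit_2023", date(2024, 1, 1), False),
        ("regulatory_ledger", date(2024, 1, 1), True),
    ]:
        print(name, assign_tier(last, today, critical))
```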

Performance tuning: partitions, materialized views, and indexes

Performance tuning in Fabric is most effective when aligned with access patterns rather than raw data volume.

Key techniques include:

- Partitioning large tables by time or domain attributes

- Leveraging materialized views for frequently queried aggregations

- Applying indexing, or lakehouse counterparts such as Z-ordering on Delta tables, selectively to accelerate BI and SQL workloads

In hybrid contexts, reducing data movement is often the most impactful optimization. Bringing compute closer to data—or synchronizing only what is required—improves both performance and cost efficiency.
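Partition pruning is the mechanism behind time-based partitioning: when a table is partitioned by month, a date-filtered query only needs to read the partitions that can contain matching rows. The sketch below models this in plain Python with hypothetical month partitions; engines such as Spark apply the same idea to partitioned Delta tables automatically when the filter is on the partition column.

```python
from __future__ import annotations

from collections import defaultdict
from datetime import date


def partition_by_month(rows):
    """Group rows into partitions keyed by (year, month) of event_date."""
    parts = defaultdict(list)
    for row in rows:
        d = row["event_date"]
        parts[(d.year, d.month)].append(row)
    return parts


def query_range(parts, start: date, end: date):
    """Read only partitions that can overlap [start, end].

    Returns (matching_rows, partitions_read) so the pruning effect is visible.
    """
    first, last = (start.year, start.month), (end.year, end.month)
    candidates = [key for key in parts if first <= key <= last]
    rows = [r for key in candidates for r in parts[key] if start <= r["event_date"] <= end]
    return rows, len(candidates)


if __name__ == "__main__":
    table = partition_by_month(
        {"event_date": date(2026, m, d), "qty": 1} for m in (1, 2, 3) for d in (1, 15)
    )
    rows, read = query_range(table, date(2026, 2, 1), date(2026, 2, 28))
    print(len(rows), "rows from", read, "of", len(table), "partitions")
```

The same reasoning applies across the hybrid boundary: filtering on the partition key before synchronizing means only the relevant slices ever leave the source.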

Discover how we integrated Azure services for efficient data ingestion, transformation, and reporting, ensuring scalability and reliability through our case study.

Migration checklist & pilot plan for hybrid Fabric projects

Enterprises rarely modernize hybrid analytics in a single leap. Successful programs follow phased rollouts with measurable outcomes.

30-60-90 day pilot scope and success metrics

First 30 days: Foundation

- Identify 1–2 high-value hybrid use cases

- Establish secure connectivity and identity integration

- Land initial datasets into OneLake

Days 31–60: Expansion

- Implement incremental ingestion and CDC

- Enable BI and exploratory analytics

- Apply governance and lineage controls

Days 61–90: Optimization

- Tune performance and cost

- Expand to additional data domains

- Define enterprise rollout standards

Success metrics should extend beyond technical delivery. Leading indicators include reduced time-to-insight, improved data trust, and increased adoption across business teams.

This phased approach aligns with Techment’s proven readiness frameworks used across Fabric and Azure modernization engagements.

For deeper context on transforming enterprise data systems before AI deployment, explore our data engineering services.

Case study: hybrid analytics modernization in a global enterprise

Scenario (hypothetical but representative):

A global manufacturing enterprise operates plants across North America, Europe, and Asia. Core operational systems run on-prem for latency and resilience reasons, while corporate analytics teams push for advanced forecasting and AI-driven optimization.

Challenge:

- Fragmented analytics stacks per region

- Limited visibility into global performance

- Regulatory constraints preventing raw data centralization

Architecture approach:

- On-prem systems publish incremental data to Azure via secure integration runtimes

- Curated datasets land in Microsoft Fabric OneLake

- Regional data remains local; aggregated views support global analytics

- Power BI and Fabric analytics serve both plant managers and executives

Outcomes:

- 40% reduction in analytics delivery time

- Consistent governance across regions

- Foundation established for AI-driven demand forecasting

This case reflects a common pattern: hybrid data solutions with Microsoft Fabric and Azure enable modernization without forcing disruptive re-platforming.

Explore how enterprise reliability improves with governance-forward architecture in our data governance solution offerings.

How Techment helps enterprises succeed with hybrid data solutions

Designing and operating hybrid analytics platforms requires more than tooling—it demands architectural discipline, governance maturity, and execution experience.

Techment supports enterprises across the full hybrid data lifecycle:

- Hybrid data strategy and architecture design aligned to business outcomes

- Microsoft Fabric readiness assessments and roadmap development

- Azure and Fabric implementation spanning ingestion, lakehouse, BI, and AI

- Governance, security, and compliance frameworks using Fabric and Azure-native controls

- Operational optimization for cost, performance, and scale

Rather than treating Fabric as a standalone platform, Techment positions it as a strategic enabler within broader data and AI operating models. This integrated approach helps enterprises move from hybrid complexity to analytics clarity.

Related Insight: Is Your Enterprise AI-Ready? A Fabric-Focused Readiness Checklist

FAQs — quick answers for decision makers

Can you query on-prem data directly from Microsoft Fabric?

Direct querying is limited. Best practice is controlled ingestion into OneLake using secure pipelines to ensure performance, governance, and scalability.

Is Microsoft Fabric suitable for regulated industries?

Yes. When combined with Azure identity, encryption, and residency controls, Fabric supports stringent compliance requirements.

How long does a hybrid Fabric implementation typically take?

Initial pilots often deliver value in 60–90 days, with enterprise-scale rollouts phased over several quarters.

Does hybrid analytics slow down AI adoption?

When designed correctly, hybrid architectures accelerate AI by improving data quality, governance, and accessibility.

Conclusion

Hybrid is not a temporary architecture—it is the long-term reality for enterprise data. Hybrid Data Solutions with Microsoft Fabric and Azure provide a cohesive way to modernize analytics while respecting operational, regulatory, and economic constraints.

By unifying storage, analytics, governance, and identity across hybrid environments, Fabric enables enterprises to shift focus from integration complexity to business insight. The organizations that succeed will be those that treat hybrid analytics as a strategic platform decision, not a tactical workaround.

For enterprises navigating this transition, a thoughtful architecture, disciplined governance, and an experienced partner make all the difference. Techment stands ready to help organizations design, implement, and optimize hybrid data solutions that scale with ambition—not technical debt.