In the age of AI-driven decision-making and data-powered innovation, the true competitive edge lies not just in how much data a company holds, but in the quality of that data. Training machine learning models or drawing insights from dashboards without a Data Quality Framework for AI and Analytics is like building on sand: fragile, unstable, and bound to collapse. Your AI and analytics outcomes are only as strong as the foundation they rest on. That foundation? A robust Data Quality Framework (DQF).

In this guide, we’ll explore how implementing a comprehensive Data Quality Framework for AI and Analytics transforms raw information into a strategic asset, ensuring your analytics deliver actionable insights and your AI models perform with precision. Let’s dive into the essential components that make data quality the cornerstone of successful digital transformation.

Understanding the significance of a Data Quality Framework for AI and Analytics can lead to improved business outcomes and strategic advantages. This guide covers:

- How Does Poor Data Quality Impact Enterprises?

- Why Is a Data Quality Framework Important?

- How Better Data Quality Translates to Better AI and Analytics

- Strategic Practices to Elevate Data Quality Across the Enterprise

- How We Can Help You Build a Strong DQF

- Conclusion: Don’t Let Poor Data Quality Derail Your Innovation

How Does Poor Data Quality Impact Enterprises?

Data quality is the cornerstone of reliable decision-making and operational efficiency. It reflects how well your data aligns with defined standards and expectations. Yet, many organizations grapple with subpar data—often referred to as ‘data debt’—which accumulates over time and undermines the value of their data assets.

Leaders across industries now recognize that poor data quality isn’t just a technical glitch—it’s a strategic liability. Gartner reports that poor data quality costs organizations an average of $12.9 million annually. In industries like healthcare, manufacturing, and finance, the consequences are even more severe: misdiagnosed patients, faulty demand forecasts, and compliance penalties.

IBM estimates that bad data costs the U.S. economy $3.1 trillion annually, affecting everything from supply chain efficiency to customer satisfaction and compliance. With stakes this high, organizations can no longer afford to treat data quality as an afterthought or a technical detail. A well-designed Data Quality Framework isn’t just about cleaner datasets; it’s about protecting your bottom line and ensuring every data-driven decision moves your business forward with confidence.

Why Is a Data Quality Framework Important?

A Data Quality Framework is a set of principles, practices, and tools designed to ensure that data is accurate, complete, consistent, timely, and relevant for its intended use.

At its core, it’s about answering critical questions:

- Is this data correct?

- Is it complete?

- Is it coming in on time?

- Can it be trusted across systems and teams?

A modern DQF integrates policy (governance), process (data validation, enrichment), people (stewards, analysts), and platforms (AI/ML-driven monitoring tools).

How Better Data Quality Translates to Better AI and Analytics

AI models are incredibly sensitive to data noise. Biases in data collection, incomplete records, or labeling errors can lead to misleading insights or unethical outcomes. For instance:

- A healthcare algorithm trained on poor demographic data might misdiagnose certain populations.

- A fraud detection system may falsely flag loyal customers due to historical inaccuracies.

By embedding data quality gates before model training, organizations can significantly improve model precision, recall, and real-world generalizability. The risks are concrete: poor data quality exposes organizations to misdiagnosed patients or compliance penalties, as one healthcare provider experienced before partnering with us to migrate and clean a significant volume of unstructured, fragmented patient data.
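A pre-training quality gate can be as simple as a function that inspects the training set and stops the job when basic checks fail. The minimal Python sketch below assumes rows arrive as dictionaries with a `label` field; the feature names, allowed labels, missing-value limit, and minimum group share are hypothetical values chosen for illustration, not recommendations. It covers three of the risks described above: incomplete records, labeling errors, and under-represented groups.

```python
from collections import Counter
from typing import Any


class QualityGateError(Exception):
    """Raised when a training set fails the pre-training quality gate."""


def check_training_gate(
    rows: list[dict[str, Any]],
    required_features: list[str],
    allowed_labels: set[str],
    group_field: str,
    max_missing_rate: float = 0.05,
    min_group_share: float = 0.10,
) -> list[str]:
    """Return human-readable failures; an empty list means the gate passes."""
    n = len(rows)
    if n == 0:
        return ["training set is empty"]
    failures: list[str] = []

    # Completeness: a required feature may be absent or None in only a few rows.
    for feature in required_features:
        missing = sum(1 for r in rows if r.get(feature) is None)
        if missing / n > max_missing_rate:
            failures.append(f"{feature}: {missing}/{n} values missing")

    # Label validity: catch labeling errors such as typos or unknown classes.
    bad = sum(1 for r in rows if r.get("label") not in allowed_labels)
    if bad:
        failures.append(f"invalid labels in {bad} of {n} rows")

    # Representation: flag groups that appear but are under-represented
    # (groups missing entirely would need an explicit expected-groups list).
    counts = Counter(r.get(group_field) for r in rows)
    for group, count in counts.items():
        if count / n < min_group_share:
            failures.append(f"group {group!r} is only {count / n:.0%} of rows")

    return failures


def gate_or_raise(rows: list[dict[str, Any]], **kwargs: Any) -> None:
    """Stop the training job when any gate check fails."""
    failures = check_training_gate(rows, **kwargs)
    if failures:
        raise QualityGateError("; ".join(failures))


# Hypothetical loan-approval training rows.
rows = [
    {"age": 34, "income": 52000, "region": "north", "label": "approve"},
    {"age": None, "income": 61000, "region": "north", "label": "approve"},
    {"age": 45, "income": 48000, "region": "south", "label": "reject"},
    {"age": 29, "income": 39000, "region": "north", "label": "aprove"},  # label typo
]
settings = dict(
    required_features=["age", "income"],
    allowed_labels={"approve", "reject"},
    group_field="region",
    min_group_share=0.3,
)
failures = check_training_gate(rows, **settings)
for failure in failures:
    print(failure)
```

In a real pipeline, a call like `gate_or_raise` would run as the first step of the training job, so a failing dataset never reaches the model.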

Improved Analytics Accuracy and Decision-Making

Inaccurate dashboards lead to misguided strategy. With a strong DQF, business leaders can:

- Trust the metrics in real time.

- Slice and dice data without fearing inconsistencies.

- Build predictive models with reliable historical data.

Faster Time-to-Insight

Quality data reduces the need for endless cleaning and back-and-forth between teams. Your analysts spend less time firefighting and more time uncovering patterns, correlations, and opportunities.

At Techment, we’ve helped clients significantly improve data quality by engineering scalable, automated data transformation pipelines. For a U.S.-based technology and marketing company struggling with fragmented, legacy-stored data, we built an Azure-based ETL framework integrated with Power BI. The solution centralized more than 1,200 disparate data tables and automated validation and scheduling, improving data accuracy, trust, and visibility across departments. The result? Faster access to reliable insights, better cross-functional decision-making, and a future-ready foundation for AI and analytics initiatives.

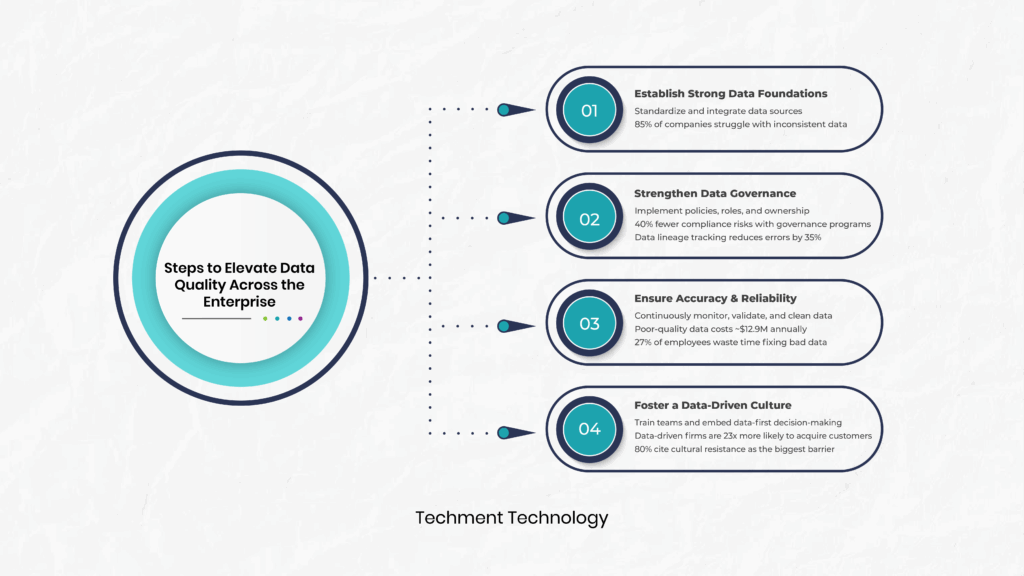

Strategic Practices to Elevate Data Quality Across the Enterprise

At its core, data quality is not a technical checkbox — it’s a business enabler. Whether it’s reducing churn, accelerating time-to-insight, or ensuring regulatory compliance, trusted data underpins every key decision. Building a strong Data Quality Framework is not about perfection, but about creating an agile, scalable, and business-aligned approach to managing data as a valuable asset.

Start with Business Impact

Before implementing technical solutions, map data quality issues to business outcomes. Identify how inaccurate customer data affects service delivery or how incomplete product information hampers sales effectiveness. This business-first approach secures executive support and ensures your DQF aligns with organizational priorities. Accelerating value from data doesn’t require multi‑year roadmaps—it begins with aligning strategic data initiatives to real business drivers. At Techment, we follow this incremental but strategic approach—aligning quality metrics with key KPIs, launching rapid data discovery assessments, and crafting frameworks that grow with your organizational maturity.

Define “good enough” standards for your organization—data quality improvement isn’t about perfection but enabling confident decision-making.
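One lightweight way to map data quality issues to business outcomes is to estimate each issue's cost as affected records multiplied by an agreed per-record business cost, then rank only the issues whose error rate exceeds the "good enough" tolerance. The issue names, record counts, costs, and tolerances in this sketch are hypothetical placeholders, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class DataQualityIssue:
    name: str
    kpi: str                # business KPI the issue affects
    affected_records: int   # records failing the check
    total_records: int
    cost_per_record: float  # estimated business cost of one bad record (assumed)
    tolerance: float        # "good enough" error rate agreed with the business

    @property
    def error_rate(self) -> float:
        return self.affected_records / self.total_records

    @property
    def estimated_cost(self) -> float:
        return self.affected_records * self.cost_per_record

    @property
    def within_tolerance(self) -> bool:
        return self.error_rate <= self.tolerance


def prioritize(issues: list[DataQualityIssue]) -> list[DataQualityIssue]:
    """Return issues that exceed their tolerance, highest estimated cost first."""
    over_tolerance = [i for i in issues if not i.within_tolerance]
    return sorted(over_tolerance, key=lambda i: i.estimated_cost, reverse=True)


# Hypothetical issues surfaced by a data discovery exercise.
issues = [
    DataQualityIssue("invalid customer emails", "service delivery", 1_200, 50_000, 4.0, 0.01),
    DataQualityIssue("missing product attributes", "sales conversion", 300, 10_000, 25.0, 0.02),
    DataQualityIssue("duplicate supplier records", "procurement cost", 40, 8_000, 10.0, 0.01),
]

for issue in prioritize(issues):
    print(f"{issue.name}: {issue.error_rate:.1%} bad, ~${issue.estimated_cost:,.0f} ({issue.kpi})")
```

Here the duplicate supplier records fall within their tolerance, so they drop off the list even though they are imperfect, which is the practical meaning of a "good enough" standard.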

Establish Clear Standards and Governance

Create enterprise-wide data quality standards focusing on five critical dimensions: Accuracy, Completeness, Consistency, Timeliness, and Uniqueness. Set specific metrics and thresholds for each dimension while allowing flexible implementation across business units with varying maturity levels. To understand your current data landscape, use our Data Discovery Assessment Services to evaluate data across systems. Customized to your priorities, the assessment surfaces hidden quality issues and provides a remediation roadmap aligned with business KPIs.
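To make the five dimensions measurable, each can be expressed as the share of records that pass a rule and then compared against a threshold. The sketch below is one possible set of definitions, assuming a hypothetical batch of customer records and a hypothetical system of record for accuracy; the field names, 30-day freshness window, and thresholds are illustrative, and dedicated data quality tools define these metrics in their own ways.

```python
from datetime import datetime, timedelta

# Hypothetical batch of customer records from an operational system.
records = [
    {"id": 1, "email": "ana@example.com", "country": "US",
     "created": datetime(2024, 1, 5), "updated": datetime(2024, 6, 1)},
    {"id": 2, "email": None, "country": "DE",
     "created": datetime(2024, 2, 1), "updated": datetime(2024, 6, 2)},
    {"id": 3, "email": "li@example.com", "country": "FR",
     "created": datetime(2024, 3, 9), "updated": datetime(2024, 3, 1)},
    {"id": 3, "email": "li@example.com", "country": "FR",
     "created": datetime(2024, 3, 9), "updated": datetime(2024, 3, 1)},
]
# Hypothetical system of record used as ground truth for accuracy.
reference_country = {1: "US", 2: "AT", 3: "FR"}


def score_dimensions(rows, reference, now, max_age=timedelta(days=30)) -> dict[str, float]:
    """Return the share of rows (0.0 to 1.0) that pass each dimension's rule."""
    n = len(rows)
    return {
        # Completeness: the email field is populated.
        "completeness": sum(r["email"] is not None for r in rows) / n,
        # Accuracy: the country agrees with the system of record.
        "accuracy": sum(reference.get(r["id"]) == r["country"] for r in rows) / n,
        # Consistency: a record cannot be updated before it was created.
        "consistency": sum(r["updated"] >= r["created"] for r in rows) / n,
        # Timeliness: the record was refreshed within the freshness window.
        "timeliness": sum(now - r["updated"] <= max_age for r in rows) / n,
        # Uniqueness: distinct IDs relative to total rows.
        "uniqueness": len({r["id"] for r in rows}) / n,
    }


thresholds = {"completeness": 0.95, "accuracy": 0.98, "consistency": 1.0,
              "timeliness": 0.90, "uniqueness": 1.0}

scores = score_dimensions(records, reference_country, now=datetime(2024, 6, 10))
for dim, score in scores.items():
    status = "PASS" if score >= thresholds[dim] else "FAIL"
    print(f"{dim:<13} {score:.2f} (target {thresholds[dim]:.2f}) {status}")
```

Business units at different maturity levels can share these definitions while setting their own thresholds.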

Build a Data Council that bridges technical stewards and business stakeholders. Assign clear ownership to data domains and establish data steward roles with specific responsibilities for monitoring and escalating quality issues throughout the data lifecycle.
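Ownership is easier to enforce when it is recorded somewhere a pipeline can read. The sketch below keeps a hypothetical registry of data domains, stewards, and owners, and routes a quality issue to the steward first, escalating to the accountable owner once it has stayed open past an agreed window. The domain names, role identifiers, and escalation windows are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DomainOwnership:
    domain: str
    steward: str              # monitors quality day to day
    owner: str                # accountable business owner for escalations
    escalate_after_days: int  # how long an issue may stay with the steward


# Hypothetical registry maintained by the Data Council.
REGISTRY = {
    "customer": DomainOwnership("customer", "customer-data-steward", "vp-customer-experience", 3),
    "product": DomainOwnership("product", "product-data-steward", "head-of-merchandising", 5),
}


def route_issue(domain: str, days_open: int) -> str:
    """Return who should handle a quality issue, escalating when it stays open too long."""
    entry = REGISTRY.get(domain)
    if entry is None:
        # Unowned data is itself a governance gap, so it goes to the council.
        return "data-council"
    if days_open > entry.escalate_after_days:
        return entry.owner
    return entry.steward


print(route_issue("customer", days_open=1))  # customer-data-steward
print(route_issue("customer", days_open=4))  # vp-customer-experience
print(route_issue("finance", days_open=0))   # data-council
```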

Implement Proactive Monitoring

Deploy automated data profiling tools such as Great Expectations or Talend to detect anomalies early. Create real-time DQ dashboards that show current status and historical trends, enabling stakeholders to track improvements and plan proactively. For one of our U.S. technology clients, we implemented automated validation and real-time dashboards that help monitor quality trends and catch data errors before they reach analytics.
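Profiling tools like Great Expectations and Talend ship their own anomaly and validation features. To show the underlying idea without relying on either product's API, the sketch below flags a daily profiling metric (here, a hypothetical null rate for an email column) when it deviates from the mean of the preceding days by more than a chosen number of standard deviations. The 7-day window, the threshold of 3, and the sample values are assumptions.

```python
from statistics import mean, stdev


def detect_anomalies(values: list[float], window: int = 7, z_threshold: float = 3.0) -> list[int]:
    """Return indices whose value is more than z_threshold standard deviations
    away from the mean of the preceding `window` values."""
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is worth a look.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > z_threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical daily null rate (%) for a customer email column.
null_rate = [1.1, 0.9, 1.0, 1.2, 1.0, 0.8, 1.1, 1.0, 1.2, 6.5, 1.1]
print(detect_anomalies(null_rate))
```

A spike stays inside the trailing window for the following days and inflates the baseline, so production monitors often exclude already-flagged points from the history.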

Establish feedback loops that systematically address issues and feed learnings back into data pipelines. Empower end-users to flag quality problems through crowdsourced initiatives.
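A feedback loop needs a place for user flags to land and a rule for when they turn into pipeline work. In the hypothetical sketch below, end-user flags are grouped by dataset and column, and any column reported by a minimum number of distinct users becomes a candidate validation rule for the data steward to review. The field names and the threshold of three users are assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UserFlag:
    user: str
    dataset: str
    column: str
    note: str


def promote_flags(flags: list[UserFlag], min_distinct_users: int = 3) -> list[tuple[str, str, int]]:
    """Group flags by (dataset, column) and return those reported by enough
    distinct users, most-reported first, as candidates for a new validation rule."""
    reporters: dict[tuple[str, str], set[str]] = defaultdict(set)
    for flag in flags:
        reporters[(flag.dataset, flag.column)].add(flag.user)
    candidates = [
        (dataset, column, len(users))
        for (dataset, column), users in reporters.items()
        if len(users) >= min_distinct_users
    ]
    return sorted(candidates, key=lambda c: c[2], reverse=True)


# Hypothetical flags submitted from dashboards and reports.
flags = [
    UserFlag("ana", "sales_orders", "ship_date", "dates in the future"),
    UserFlag("raj", "sales_orders", "ship_date", "ship date before order date"),
    UserFlag("mei", "sales_orders", "ship_date", "blank for shipped orders"),
    UserFlag("ana", "sales_orders", "ship_date", "still wrong today"),
    UserFlag("tom", "customers", "phone", "wrong country code"),
]
print(promote_flags(flags))
```

Counting distinct users rather than raw flags keeps one persistent reporter from outweighing a problem several teams have noticed independently.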

Embrace Trust-Based Models

Modern enterprises rely on both internal and external data sources. Shift from absolute “truth-based” models to “trust-based” frameworks that consider data origin, governance, and reliability when determining suitability for decision-making. Implement mitigation measures for lower-trust data sources.
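A trust-based model can be approximated with a weighted score per data source. The sketch below combines three hypothetical ratings on a 0-to-1 scale (origin, governance, reliability) using assumed weights, then maps the score to a usage tier with a mitigation step for lower-trust sources. The weights, cut-offs, source names, and mitigations are illustrative, not an industry standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceProfile:
    name: str
    origin: float       # 1.0 = first-party system of record, lower for third-party feeds
    governance: float   # 1.0 = documented owner, data contract, and lineage
    reliability: float  # e.g. share of recent deliveries that passed validation


# Assumed weights; in practice these would be agreed by the Data Council.
WEIGHTS = {"origin": 0.3, "governance": 0.3, "reliability": 0.4}


def trust_score(src: SourceProfile) -> float:
    return (WEIGHTS["origin"] * src.origin
            + WEIGHTS["governance"] * src.governance
            + WEIGHTS["reliability"] * src.reliability)


def usage_tier(score: float) -> tuple[str, str]:
    """Map a trust score to a usage tier and the mitigation it requires."""
    if score >= 0.8:
        return "decision-grade", "none"
    if score >= 0.5:
        return "directional", "cross-check against a trusted source before acting"
    return "exploratory", "quarantine; keep out of dashboards and model training"


# Hypothetical sources with hand-assigned ratings.
sources = [
    SourceProfile("erp_orders", origin=1.0, governance=0.9, reliability=0.95),
    SourceProfile("partner_feed", origin=0.6, governance=0.5, reliability=0.7),
    SourceProfile("scraped_prices", origin=0.2, governance=0.1, reliability=0.6),
]
for src in sources:
    score = trust_score(src)
    tier, mitigation = usage_tier(score)
    print(f"{src.name}: {score:.2f} -> {tier} ({mitigation})")
```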

Integrate Quality into DevOps

Make data quality checks mandatory “stage gates” in release management. Integrate quality validation into CI/CD pipelines and leverage modern architectures like Data Mesh or Lakehouse platforms that decentralize quality management while maintaining enterprise standards.
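In a CI/CD pipeline, a stage gate is typically a step whose non-zero exit code fails the job and blocks the release. The sketch below assumes an earlier pipeline step has written check results to a JSON file in a hypothetical format (a list of objects with `name`, `passed`, and `severity` fields) and fails the build only on blocking failures. The file format, severity levels, and script name are illustrative assumptions.

```python
import json
import sys


def evaluate_gate(results: list[dict]) -> tuple[bool, list[str]]:
    """Return (passed, messages). Only 'blocking' failures fail the gate;
    'warning' failures are reported but let the release proceed."""
    blocking = [r["name"] for r in results if not r["passed"] and r["severity"] == "blocking"]
    warnings = [r["name"] for r in results if not r["passed"] and r["severity"] == "warning"]
    messages = [f"BLOCKING: {name}" for name in blocking] + [f"WARNING: {name}" for name in warnings]
    return not blocking, messages


def main(path: str) -> int:
    """Read check results from `path`, print a summary, and return an exit code."""
    with open(path, encoding="utf-8") as fh:
        results = json.load(fh)
    passed, messages = evaluate_gate(results)
    for message in messages:
        print(message)
    print("Data quality gate:", "PASSED" if passed else "FAILED")
    return 0 if passed else 1


if __name__ == "__main__" and len(sys.argv) == 2:
    # Hypothetical pipeline step: python dq_gate.py dq_results.json
    sys.exit(main(sys.argv[1]))
```

Separating blocking from warning checks lets teams add new rules as warnings first and promote them to blocking once they stop producing false alarms.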

Communicate Value Continuously

Measure and report quality improvements in business terms. Demonstrate how data quality enhancements correlate with improved customer responsiveness, reduced costs, and better decision-making. Make DQ a regular governance board agenda item, presenting progress in language that emphasizes business impact. For example, by mapping poorly structured patient records directly to clinical outcomes and analytics readiness, our healthcare client was able to reduce manual processes and accelerate care decisions.

Connect with industry peer groups and vendor communities to exchange best practices and accelerate organizational maturity.

How We Can Help You Build a Strong DQF

At Techment, where we help enterprises leverage data for innovation, we’ve seen firsthand how poor-quality data stalls digital transformation, dilutes AI accuracy, and weakens customer experiences. Conversely, a strong DQF accelerates time to insight, reduces operational risks, and increases trust across the enterprise.

We take a business-aligned, technology-agnostic approach to data quality, partnering with clients to:

- Assess current data health using proven maturity models.

- Design frameworks tailored to industry and compliance needs.

- Implement tools and processes for continuous improvement.

- Monitor and evolve the framework to keep pace with changing business objectives.

Whether you’re building a data warehouse, migrating to the cloud, or launching AI initiatives, data quality is the lever that multiplies value.

Conclusion: Don’t Let Poor Data Quality Derail Your Innovation

Today, data is more than a resource—it’s the backbone of digital innovation. But without quality, even the most sophisticated analytics or AI initiatives are bound to fail. For leaders focused on growth, agility, and differentiation, building a strong Data Quality Framework isn’t a technical choice—it’s a strategic imperative.
